Modeling Dynamics over Meshes with Gauge Equivariant Nonlinear Message Passing

Abstract: Data over non-Euclidean manifolds, often discretized as surface meshes, naturally arise in computer graphics and biological and physical systems. In particular, solutions to partial differential equations (PDEs) over manifolds depend critically on the underlying geometry. While graph neural networks have been successfully applied to PDEs, they do not incorporate surface geometry and do not consider local gauge symmetries of the manifold. Alternatively, recent works on gauge equivariant convolutional and attentional architectures on meshes leverage the underlying geometry but underperform in modeling surface PDEs with complex nonlinear dynamics. To address these issues, we introduce a new gauge equivariant architecture using nonlinear message passing. Our novel architecture achieves higher performance than either convolutional or attentional networks on domains with highly complex and nonlinear dynamics. However, similar to the non-mesh case, design trade-offs favor convolutional, attentional, or message passing networks for different tasks; we investigate in which circumstances our message passing method provides the most benefit.

Paper

Published at Advances in Neural Information Processing Systems 2023 (NeurIPS 2023)

*Equal Advising

Khoury College of Computer Sciences

Northeastern University

Idea

This work studies the problem of predicting complex dynamics over surface meshes.

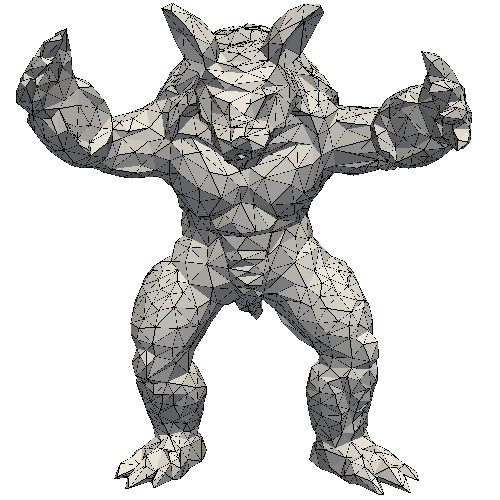

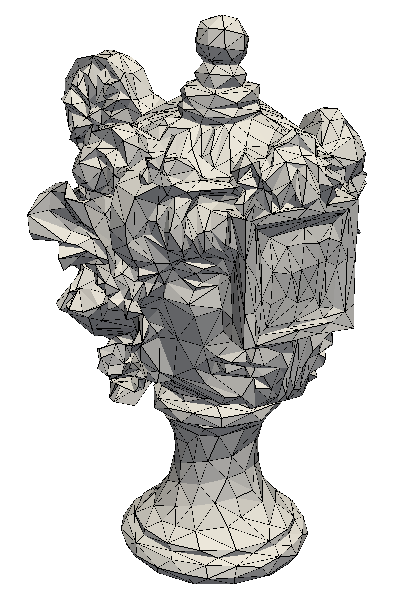

Meshes are a commonly used representation of non-Euclidean manifolds. Compared to other 3D representations such as point clouds or voxels, only meshes can represent both the topology and geometry of the manifold.

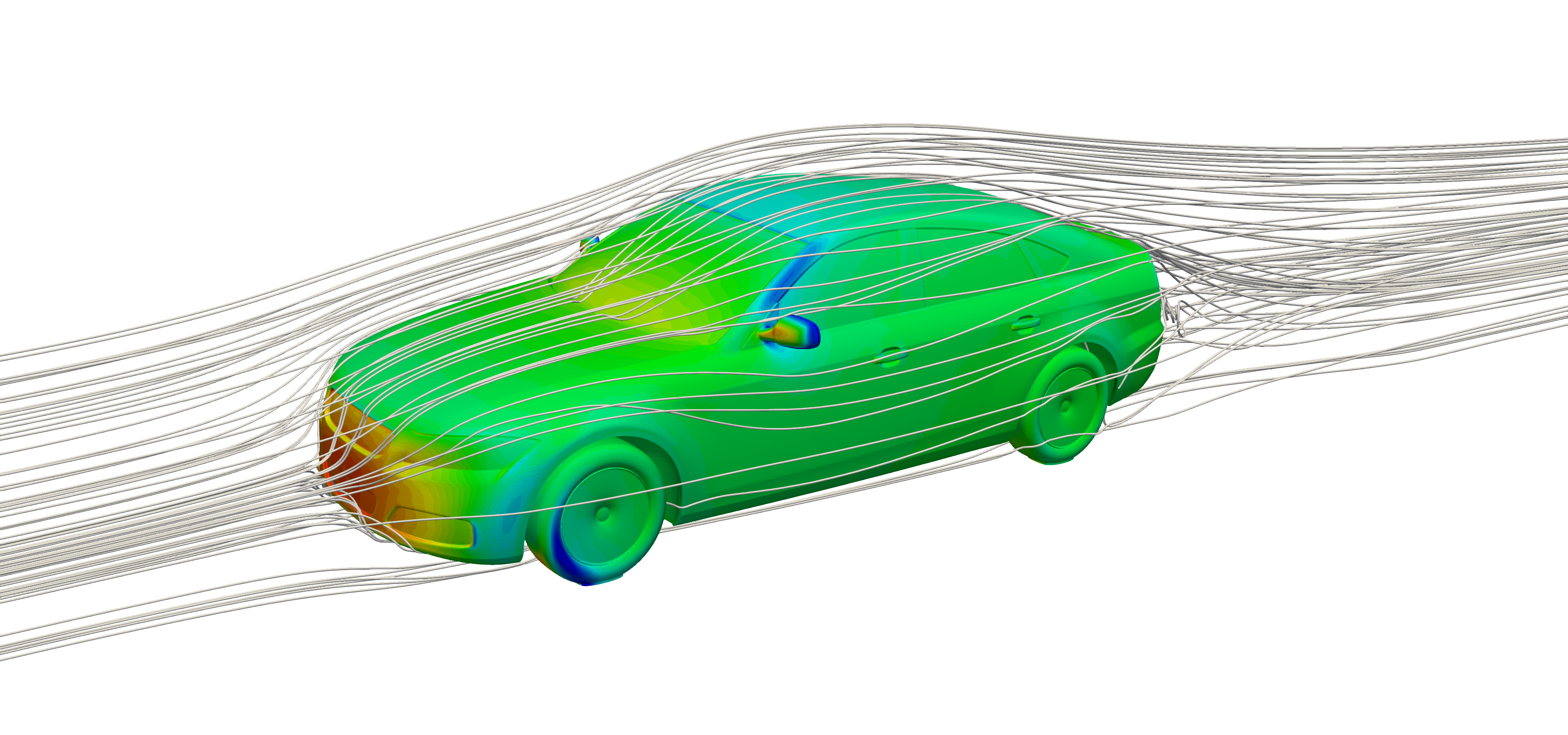

Solving partial differential equations (PDEs) over surfaces is an important task in many domains, such as aerodynamics and thermodynamics.

Many approaches to learning signals over meshes reduce the mesh to a graph, discarding important geometric information. Verma et al., 2018 and De Haan et al., 2020 have shown that ignoring the intrinsic geometry of the mesh leads to reduced performance.
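To make the loss of information concrete, the sketch below reduces a triangle mesh to its edge graph. It is a minimal illustration, not code from the Hermes repository. The toy tetrahedron and the helper name `mesh_to_graph` are hypothetical. The vertex positions are never read, so geometry is dropped. A closed tetrahedron and an open one missing a face also map to the same graph, so part of the topology is lost too.

```python
from itertools import combinations

# Hypothetical toy mesh: vertex positions (geometry) plus triangular faces (topology).
vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
faces = [(0, 1, 2), (0, 1, 3), (0, 2, 3), (1, 2, 3)]  # closed tetrahedron


def mesh_to_graph(faces):
    """Return the undirected edge set implied by the faces (connectivity only)."""
    edges = set()
    for face in faces:
        for i, j in combinations(face, 2):
            edges.add((min(i, j), max(i, j)))
    return edges


closed_graph = mesh_to_graph(faces)
# Dropping face (1, 2, 3) opens the surface, yet every edge is still present.
open_graph = mesh_to_graph(faces[:3])
```

A graph network that consumes `closed_graph` alone cannot tell these two surfaces apart. It also cannot recover angles or areas unless positions are added back as features.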

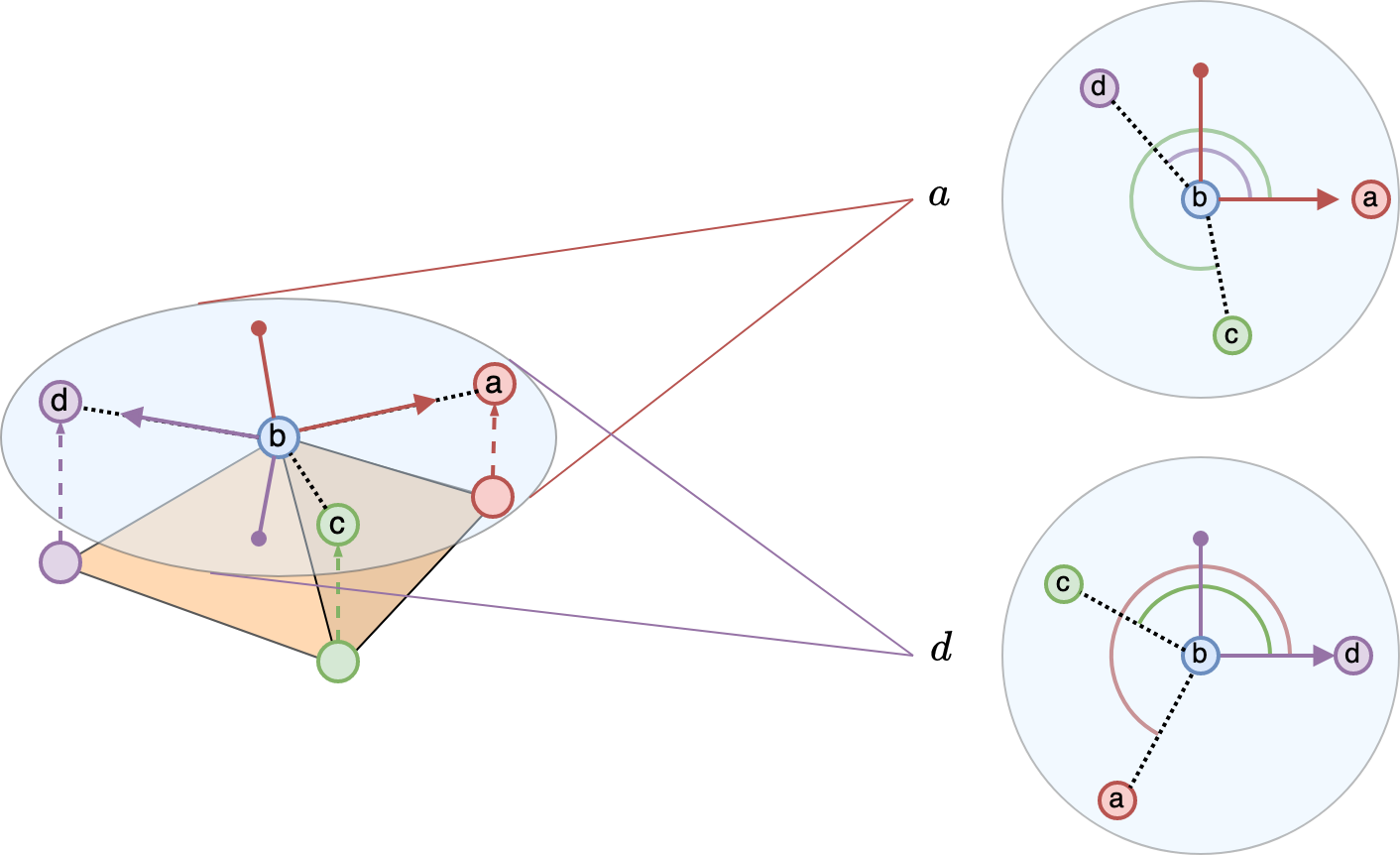

A key geometric aspect of a mesh is gauge symmetry. In the figure above, consider vertex \(b\) and its neighbors. After we project the neighbors onto the tangent plane at vertex \(b\), the choice of local reference frame, or gauge, is ambiguous. For example, we could choose vertex \(a\) as the reference neighbor and measure the angles (orientations) of the other neighbors relative to it. Choosing vertex \(d\) as the gauge instead would give a different set of orientations. Since the choice of local gauge is arbitrary, a network's predictions should not depend on it; that is, the network should be equivariant to local gauge transformations. In this work, we focus on gauge equivariant neural networks.
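The gauge ambiguity can be reproduced numerically with a small sketch. It assumes a known vertex normal and hypothetical coordinates for \(b\) and its neighbors \(a\), \(c\), \(d\). `tangent_angles` is an illustrative helper, not part of any library. The sketch projects the neighbors onto the tangent plane and measures their angles from a chosen reference neighbor. Switching the reference from \(a\) to \(d\) shifts every angle by the same constant (modulo \(2\pi\)), which is exactly a gauge transformation.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def scale(a, s):
    return tuple(x * s for x in a)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def normalize(a):
    return scale(a, 1.0 / math.sqrt(dot(a, a)))


def tangent_angles(center, normal, neighbors, ref):
    """Angles of each neighbor in the tangent plane at `center`, measured from
    the projection of neighbors[ref], which plays the role of the gauge."""
    n = normalize(normal)

    def project(q):
        v = sub(q, center)
        return sub(v, scale(n, dot(v, n)))  # remove the normal component

    e1 = normalize(project(neighbors[ref]))  # first tangent axis points at the reference
    e2 = cross(n, e1)                        # second axis completes a right-handed frame
    return [math.atan2(dot(project(q), e2), dot(project(q), e1)) for q in neighbors]


# Hypothetical coordinates for vertex b and its neighbors a, c, d.
b = (0.0, 0.0, 0.0)
normal = (0.0, 0.0, 1.0)
nbrs = [(1.0, 0.0, 0.2), (0.0, 1.0, -0.1), (-1.0, -1.0, 0.3)]  # a, c, d

angles_a = tangent_angles(b, normal, nbrs, ref=0)  # gauge: neighbor a
angles_d = tangent_angles(b, normal, nbrs, ref=2)  # gauge: neighbor d
```

Here `angles_a` is approximately `[0, pi/2, -3pi/4]`. Every entry of `angles_d` equals the corresponding entry of `angles_a` minus `angles_a[2]`, up to a multiple of \(2\pi\). A gauge equivariant network must produce consistent outputs under this shift.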

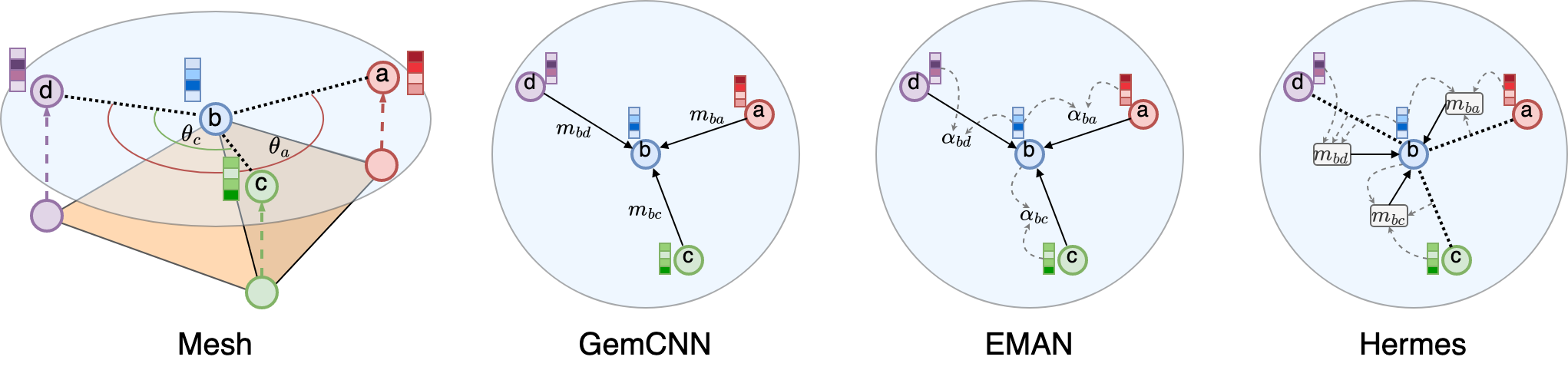

Previous works on gauge equivariant networks for meshes considered convolutional (GemCNN) and attentional (EMAN) architectures. However, in the context of modeling complex dynamics on meshes, such as solving surface PDEs, we find that they underperform.

We propose combining gauge equivariance and nonlinear message passing and name our new architecture Hermes.
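The sketch below illustrates the constraint that "gauge equivariant" and "nonlinear message" must satisfy together. It is not the Hermes layer; it only shows one simple way to meet that constraint. It assumes 2D tangent-plane features: scalars, plus vectors with a known parallel transport angle `alpha` from the sender's gauge to the receiver's. The weights `W_EDGE`, `W_REL`, `W_SCALAR` and the helpers `gate` and `message` are hypothetical stand-ins for learned components. The message applies arbitrary nonlinearities only to gauge invariant quantities. It then uses those outputs to scale vectors expressed in the receiver's gauge, so the result rotates along with any change of gauge.

```python
import math


def rot(angle, v):
    """Rotate a 2D tangent-plane vector counterclockwise by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1])


def gate(weights, features):
    """Tiny nonlinear scalar function; a hypothetical stand-in for a learned MLP."""
    return math.tanh(sum(w * f for w, f in zip(weights, features)))


# Hypothetical fixed weights standing in for learned parameters.
W_EDGE = (0.7, -0.3, 0.5, 0.2, -0.1)
W_REL = (-0.4, 0.9, 0.1, -0.6, 0.3)
W_SCALAR = (0.2, 0.5, -0.8, 0.4, 0.6)


def message(s_p, s_q, v_p, v_q, theta, alpha):
    """Nonlinear message from neighbor q to vertex p.

    s_p, s_q: scalar (gauge invariant) features at p and q.
    v_p: tangent vector at p in p's gauge; v_q: tangent vector at q in q's gauge.
    theta: angle of edge p->q in p's gauge; alpha: transport angle q's gauge -> p's.
    Returns (scalar message, vector message expressed in p's gauge).
    """
    u = (math.cos(theta), math.sin(theta))           # edge direction in p's gauge
    vq_at_p = rot(alpha, v_q)                         # parallel transport v_q to p
    rel = (vq_at_p[0] - v_p[0], vq_at_p[1] - v_p[1])  # relative vector in p's gauge
    # Invariant under rotating p's gauge: scalars, a norm, and the 2D inner and
    # cross products of two vectors expressed in the same gauge.
    inv = (s_p, s_q, math.hypot(rel[0], rel[1]),
           u[0] * rel[0] + u[1] * rel[1],
           u[0] * rel[1] - u[1] * rel[0])
    scalar_msg = gate(W_SCALAR, inv)
    # Invariant nonlinear gates times equivariant vectors remain equivariant.
    g_edge, g_rel = gate(W_EDGE, inv), gate(W_REL, inv)
    vector_msg = (g_edge * u[0] + g_rel * rel[0], g_edge * u[1] + g_rel * rel[1])
    return scalar_msg, vector_msg


# Rotating p's gauge by g lowers angles measured at p by g (theta and alpha)
# and re-expresses vectors at p as rot(-g, v).
g = 1.3
m_s, m_v = message(0.3, -1.2, (0.5, -0.2), (1.1, 0.4), 0.8, -0.5)
m_s_g, m_v_g = message(0.3, -1.2, rot(-g, (0.5, -0.2)), (1.1, 0.4), 0.8 - g, -0.5 - g)
```

After the gauge change, `m_s_g` equals `m_s` and `m_v_g` equals `rot(-g, m_v)`. The scalar message is therefore invariant and the vector message equivariant. Changing q's gauge leaves the message unchanged, because the rotation of `v_q` is cancelled by the matching change in `alpha`.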

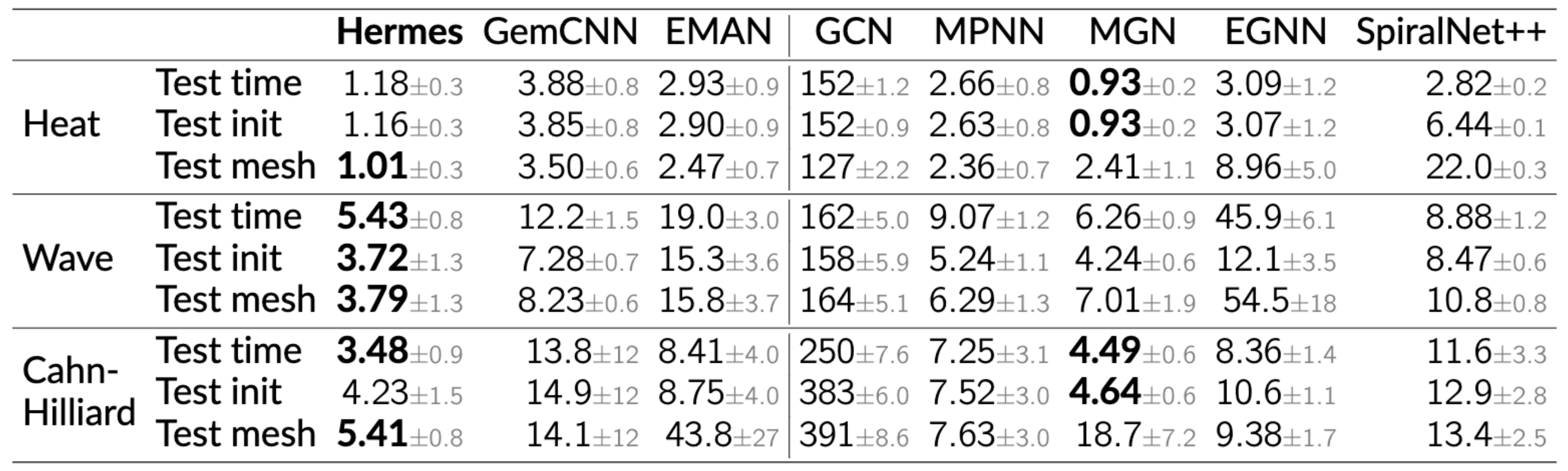

We evaluate Hermes on several domains: surface PDEs, cloth dynamics, object interactions, and shape correspondence. For the surface PDEs, we consider 1) Heat, 2) Wave, and 3) Cahn-Hilliard. Heat and Wave are second-order linear PDEs, while Cahn-Hilliard is a fourth-order nonlinear PDE that describes phase separation in a binary fluid mixture. We also test generalization to future timesteps (test time), to unseen initial conditions (test init), and to unseen meshes (test mesh).
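For reference, the textbook forms of these three PDEs on a surface \(\mathcal{M}\) are written below using the Laplace-Beltrami operator \(\Delta_{\mathcal{M}}\). The coefficients \(\alpha\), \(c\), \(D\), \(\gamma\) are generic placeholders. The exact parameterization used to generate the datasets may differ.

```latex
\begin{aligned}
\text{Heat:} \quad & \frac{\partial u}{\partial t} = \alpha \, \Delta_{\mathcal{M}} u \\
\text{Wave:} \quad & \frac{\partial^2 u}{\partial t^2} = c^2 \, \Delta_{\mathcal{M}} u \\
\text{Cahn-Hilliard:} \quad & \frac{\partial \phi}{\partial t} = D \, \Delta_{\mathcal{M}} \left( \phi^3 - \phi - \gamma \, \Delta_{\mathcal{M}} \phi \right)
\end{aligned}
```

Expanding the Cahn-Hilliard right-hand side gives the term \(-D\gamma\,\Delta_{\mathcal{M}}^2 \phi\), which is fourth order in space, and \(D\,\Delta_{\mathcal{M}}(\phi^3)\), which is nonlinear. Heat and Wave contain only \(\Delta_{\mathcal{M}}\) applied linearly, so they are second order in space.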

Hermes significantly outperforms its convolutional and attentional counterparts and outperforms the other baselines in most settings. In particular, it performs best on the test mesh datasets, suggesting that it learns the underlying dynamics rather than overfitting to specific mesh geometries.

Below are qualitative samples generated autoregressively for 50 timesteps, given only the initial conditions. GemCNN diverges on Heat and EMAN diverges on Cahn-Hilliard, while Hermes produces fairly realistic rollouts.
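An autoregressive rollout feeds each prediction back in as the next input. Errors made at one step therefore propagate to all later steps, which is one plausible reason a model can diverge over 50 steps. The minimal sketch below shows the loop. The "model" is a hypothetical stand-in: one explicit-Euler heat step on a 4-vertex cycle graph with the uniform graph Laplacian. It is not a trained network and not the paper's data generator.

```python
from typing import Callable, Dict, List, Tuple

State = List[float]


def rollout(step: Callable[[State], State], x0: State, n_steps: int = 50) -> List[State]:
    """Autoregressive rollout: each predicted state becomes the next input."""
    trajectory = [list(x0)]
    for _ in range(n_steps):
        trajectory.append(step(trajectory[-1]))
    return trajectory


# Hypothetical stand-in for a learned one-step model: explicit Euler for the
# heat equation on a 4-cycle using the uniform graph Laplacian.
NEIGHBORS: Dict[int, Tuple[int, int]] = {0: (1, 3), 1: (0, 2), 2: (1, 3), 3: (2, 0)}


def toy_heat_step(u: State, dt: float = 0.1) -> State:
    return [u[i] + dt * sum(u[j] - u[i] for j in NEIGHBORS[i]) for i in range(len(u))]


traj = rollout(toy_heat_step, [1.0, 0.0, 0.0, 0.0])
```

The trajectory holds 51 states: the initial condition plus 50 predictions. Because this toy step conserves the total heat, the final state is close to the uniform value 0.25 at every vertex. A learned step function replaces `toy_heat_step` in practice.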

Video

Code

https://github.com/jypark0/hermes

Citation

@inproceedings{park2023modeling,
  title={Modeling Dynamics over Meshes with Gauge Equivariant Nonlinear Message Passing},
  author={Park, Jung Yeon and Wong, Lawson L.S. and Walters, Robin},
  booktitle={Advances in Neural Information Processing Systems (NeurIPS)},
  year={2023},
  url={https://arxiv.org/abs/2310.19589}
}

Contact

If you have any questions, please feel free to contact Jung Yeon Park at park[dot]jungy[at]northeastern[dot]edu.